Not long ago, A/B testing vendors entered the market and made it possible for anyone to run an experiment on their company’s website. In 2010, VWO and Optimizely made their what-you-see-is-what-you-get (WYSIWYG) interfaces available to the market, which marked an important year for the industry. Now, just about every company with decent traffic runs experiments on its website, and the need for skilled practitioners has never been higher.

Of course, the past 11 years haven’t been without their challenges. But marketers who saw the value in creating an experimentation program within their organization (and, arguably more importantly, a digital experience optimization culture) are seeing their efforts bear fruit as they continue to separate themselves from less sophisticated competitors. Did it happen overnight? No. But over the last seven years, companies that have created data-driven cultures have been leveraging experimentation to strategically reduce risk while growing faster than their rivals.

So why do some companies seem to have more mature, data-driven digital experience optimization (DXO) programs? How are they running hundreds or even thousands of optimization experiments each year? Or, more to the point, is your own DXO experimentation program failing to produce high-quality ideas, or is it simply inefficient?

I’m not sure there’s one reason, or even a set of “best practices.” I know that’s not what you want to hear, but every business is different, and every program requires its own frameworks to succeed.

The Shift to Digital and the Digital Juggernauts

After all, more and more shopping is shifting to digital, and experimentation is more important now than ever. According to a 2021 EY survey published by Harvard Business Review, 60% of U.S. consumers are visiting brick-and-mortar stores less than they did before the pandemic, and 43% shop online more often for products they would previously have bought in store. That’s true for me as well; I order all of my groceries online. The shift from offline to online happened almost overnight, and while EY also found that 80% of U.S. consumers are still changing the way they shop, many believe the shift is fairly permanent.

While all companies are adjusting to this new norm and preparing for the future of digital, more and more of them are making experimentation, as part of a digital experience optimization program, a piece of their overall business strategy.

But for industry leaders such as Amazon, Netflix, and Booking.com, digital experimentation has long been core to their culture; they’re the pioneers who saw its value years ago. Perhaps the most important takeaway is that these companies were visionaries. They saw everything as an opportunity to test because testing, when done right, can yield large payoffs and help build a competitive edge in the marketplace. These companies are examples of how best-in-class digital experience optimization programs should operate, yet for most companies, that’s almost never the reality.

Booking.com has one of the most fascinating digital experience optimization cultures. If you’ve been in the industry a while, you’ve probably read about it. Harvard Business School professor Stefan Thomke reports that Booking.com runs more than 1,000 experiments simultaneously and an estimated 25,000 tests per year.

There are many reasons why they’re able to run so many tests every year, but a key one is that they’ve developed digital experience frameworks that democratize the process and embed it into the fabric of their culture. Experimentation is so deeply embedded, in fact, that anyone in the organization, such as a Director of Design, can come up with a radical optimization experiment and have it live in production within a couple of hours.

I find the systems and frameworks required to run a digital experience optimization program this way fascinating. Of course, Booking.com is an outlier when it comes to program maturity and sophistication, but there are lessons to be learned from programs like it. Ask yourself, “How can I experiment more than I am today?” or “How can I make my DXO experimentation program more efficient so that we can test higher-quality hypotheses?”

The digital experience optimization framework below is meant for programs asking productive questions like these. It’s also for programs that have plenty of traffic to experiment on but are dealing with an inefficient workflow. Think of it as an agile framework, possibly different from what you’re accustomed to, that’s meant to generate higher-quality ideas and facilitate a more efficient prioritization process.

Develop an Intake Framework that Fosters High-Quality Test Ideas

All digital experience optimization programs should define business objectives and key performance indicators (KPIs), as well as where to test and on which audiences, before DXO experimentation begins, yet this part of the process is often neglected. Most of the time, e-commerce sites optimize for conversion or revenue, business-to-business (B2B) companies for marketing qualified leads (MQLs) or signups, and so on. Nine times out of 10, they focus on only one, two, or maybe three metrics at a time and assume that every experiment should improve this shortlist. The business tends to think just as narrowly about where to test and on which audiences.

Groupthink can be restrictive and counterproductive. It limits creative problem solving and reduces the number of effective experiments that you can run for your business.

Instead, develop guardrails around KPIs, audiences, and pages so that your business feels empowered to be creative about solving problems through experimentation. While developing guardrails sounds counterproductive, it’s actually freeing, because you’ve given people permission to test in ways they wouldn’t have thought of before. Give them 10 metrics to improve instead of two. Allow them to run experiments on five more pages because those pages support current business objectives. Define three more audience groups, beyond desktop and mobile, that are important cohorts for your digital experience optimization program.

When you give people permission to test differently (within reason), they’re more likely to explore creative avenues they might not have previously thought possible. Take painting, for example. If you’re not accustomed to painting, it might be intimidating to try. But if you’re handed the canvas, the acrylics, and the paintbrush, you’re much more likely to give it a whirl. The point is that we don’t do things differently when we’re not comfortable doing “that thing” or when it doesn’t come naturally to us. So, make it feel natural.

Also, because these guardrails are a little restrictive, they eliminate wild ideas that have nothing to do with the goals of the DXO program or that don’t align with the approved KPIs, audiences, or pages.

Ultimately, this will save the digital experience optimization program time and money while increasing the efficiency and volume of quality tests over the long run.

Seeing Is Believing

Take this fictitious wireless company, for example. Its top priority is to increase online wireless device revenue by 5% year over year (YOY). By clearly stating that this is the number one priority of the DXO program, the company gives business units that want to run experiments a goal to focus on. And by listing exactly which metrics, audiences, and pages are approved, it gives everyone just enough leash to experiment freely while still holding them accountable.
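To make the guardrail idea concrete, here is a minimal Python sketch of an intake check for the fictitious wireless company. The specific metrics, audiences, and pages are hypothetical placeholders (the approved lists aren’t spelled out in the text), and `Guardrails`, `ExperimentIdea`, and `screen_idea` are illustrative names, not part of any testing tool’s API. The check simply reports which parts of an idea fall outside the approved lists so that off-goal ideas can be set aside before prioritization.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    primary_goal: str
    approved_metrics: frozenset[str]
    approved_audiences: frozenset[str]
    approved_pages: frozenset[str]


@dataclass(frozen=True)
class ExperimentIdea:
    name: str
    metric: str
    audience: str
    page: str


def screen_idea(idea: ExperimentIdea, rails: Guardrails) -> list[str]:
    """Return reasons the idea falls outside the guardrails; an empty list means it passes."""
    problems = []
    if idea.metric not in rails.approved_metrics:
        problems.append(f"metric '{idea.metric}' is not approved")
    if idea.audience not in rails.approved_audiences:
        problems.append(f"audience '{idea.audience}' is not approved")
    if idea.page not in rails.approved_pages:
        problems.append(f"page '{idea.page}' is not approved")
    return problems


# Hypothetical guardrails for the fictitious wireless company; every entry is a placeholder.
WIRELESS_RAILS = Guardrails(
    primary_goal="Increase online wireless device revenue 5% YOY",
    approved_metrics=frozenset({"device revenue", "add-to-cart rate", "checkout completion rate"}),
    approved_audiences=frozenset({"desktop", "mobile", "existing customers", "new visitors"}),
    approved_pages=frozenset({"device detail", "plan comparison", "cart", "checkout"}),
)

on_goal = ExperimentIdea("Sticky plan selector", "add-to-cart rate", "mobile", "device detail")
off_goal = ExperimentIdea("Blog footer redesign", "newsletter signups", "mobile", "blog")

print(screen_idea(on_goal, WIRELESS_RAILS))   # []
print(screen_idea(off_goal, WIRELESS_RAILS))  # flags the metric and the page
```

An empty list means the idea can move on to prioritization; anything else tells the requester exactly which guardrail to revisit, which keeps the conversation about program goals rather than opinions.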

Once you develop and operationalize a digital experience optimization framework like the one above, you should start to see more test ideas make their way into the program. With the criteria reframed, stakeholders should have more creative, high-quality ideas for testing.

It’s also worth noting that, with this framework, you might not start with research (not formally, at least), so you won’t have a “one and done” backlog of ideas generated after weeks of research and insight gathering. In this DXO framework, you’re placing a higher importance on institutional knowledge and speed than on precision, which will keep your backlog full at all times.

If you think your organization could benefit from a digital experience optimization framework like this, visit these Optimizely pages for a primer on how to explore different experimentation metrics and how to build a goal tree for your business. These are great resources if you’re looking to get started.

Develop a Prioritization Framework to Regain Program Efficiency

With your backlog now filling up with quality ideas, you’ve got to start managing and prioritizing them. As with any experimentation program (unless, maybe, you’re Booking.com), prioritization is important; the difference is in how you prioritize.

You must stop greasing squeaky wheels and avoid fulfilling emotional requests because, over time, they will erode the overall health of your digital experience optimization program. Avoiding them is one of the benefits of having a prioritization framework in the first place. And with your guardrails in place, you spend your time only on high-quality requests and set aside those that don’t meet program requirements.

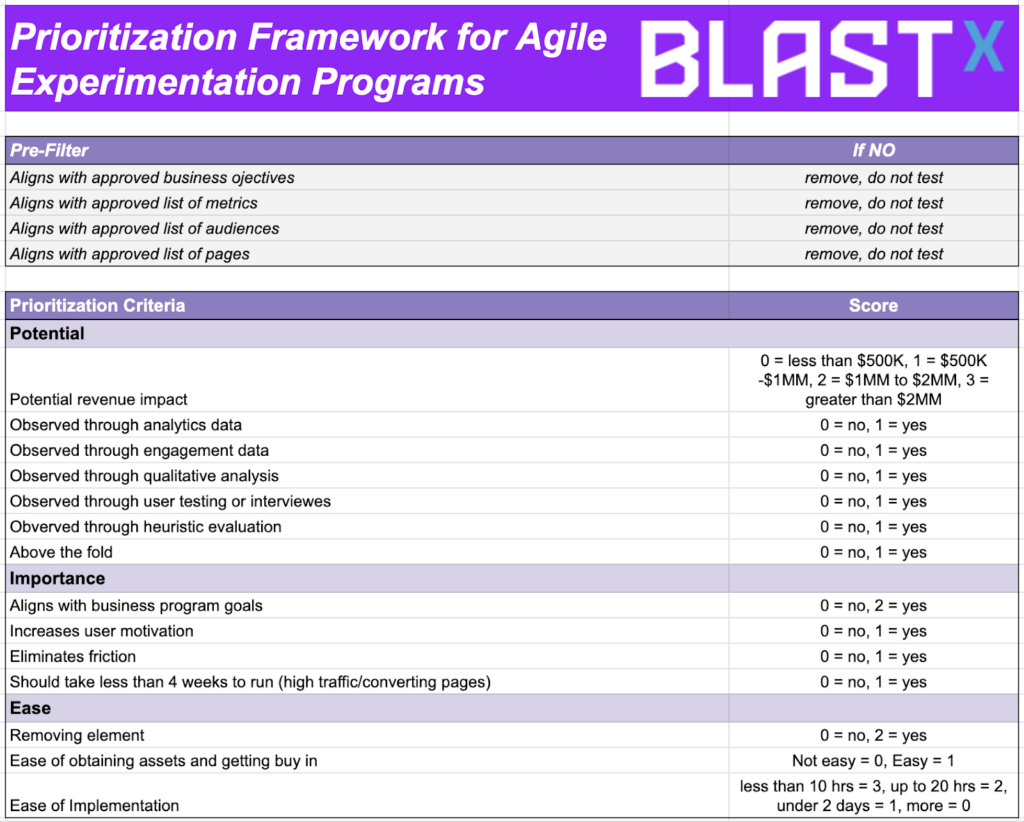

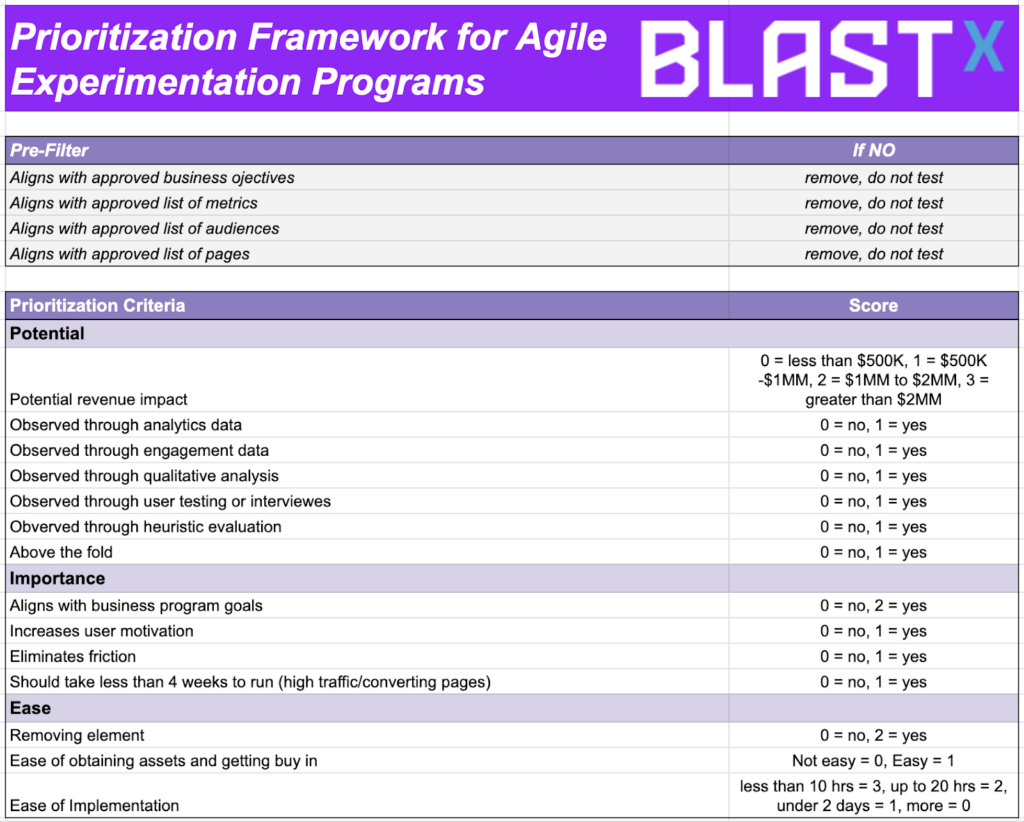

If you don’t have a good prioritization framework, or if your backlog is a mile deep, consider customizing the Potential, Importance, Ease (PIE) framework to fit your needs, as I did below.

As you can see, the first step is weeding out ideas that don’t align with your program’s criteria; those are removed completely. Next, define your evaluation criteria and give more weight to the ones that matter most. If, for example, sales are most important, weight revenue potential more heavily so that ideas with higher revenue potential rank higher. Or, if one of your DXO program goals is to increase testing velocity, weight level of effort more heavily so that low-effort ideas rise to the top.
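One way to express this customized PIE approach is as a weighted average, sketched below in Python under a few assumptions: each criterion is scored from 1 to 10 (higher “ease” means less effort), the two weight sets are hypothetical values you would tune to your own goals, and the `aligned` flag stands in for the guardrail screen from the intake step. The names `Idea`, `weighted_pie`, and `prioritize`, and the backlog itself, are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical weight sets; tune these to your own program goals.
REVENUE_FOCUS = {"potential": 2.0, "importance": 1.0, "ease": 1.0}   # sales matter most
VELOCITY_FOCUS = {"potential": 1.0, "importance": 1.0, "ease": 2.0}  # favor low-effort tests


@dataclass
class Idea:
    name: str
    aligned: bool           # True if the idea passed the intake guardrails
    scores: dict[str, int]  # 1-10 rating for each PIE criterion


def weighted_pie(scores: dict[str, int], weights: dict[str, float]) -> float:
    """Weighted average of the PIE scores (plain PIE is the equal-weight case)."""
    total_weight = sum(weights.values())
    return sum(weights[c] * scores[c] for c in weights) / total_weight


def prioritize(ideas: list[Idea], weights: dict[str, float]) -> list[tuple[str, float]]:
    # Step 1: remove ideas that don't align with the program's criteria.
    eligible = [idea for idea in ideas if idea.aligned]
    # Step 2: rank the rest by weighted score, highest first (ties keep backlog order).
    ranked = [(idea.name, weighted_pie(idea.scores, weights)) for idea in eligible]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical backlog for the wireless example.
BACKLOG = [
    Idea("Plan comparison table", True, {"potential": 8, "importance": 7, "ease": 4}),
    Idea("Trade-in value calculator", True, {"potential": 9, "importance": 8, "ease": 2}),
    Idea("Homepage hero color", False, {"potential": 3, "importance": 2, "ease": 10}),
    Idea("Free-shipping banner in cart", True, {"potential": 6, "importance": 6, "ease": 9}),
]

for label, weights in [("Revenue focus", REVENUE_FOCUS), ("Velocity focus", VELOCITY_FOCUS)]:
    print(label)
    for name, score in prioritize(BACKLOG, weights):
        print(f"  {score:.2f}  {name}")
```

With the revenue weights, the trade-in calculator ranks first (7.00) and the other two eligible ideas tie at 6.75; shifting weight to ease moves the low-effort cart banner to the top (7.50). The misaligned homepage idea never reaches scoring, which mirrors the “removed completely” step above.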

It’s all relative to your program goals, so customize the framework I’ve started to fit your culture of experimentation.

The key is having an established framework for prioritizing requests. The organization must know your priorities and the framework you’ve created so that you can most effectively manage your digital experience optimization program.

Getting Started With Two New Frameworks

While not every company is blessed with copious amounts of traffic, every company can benefit from small process improvements. The digital experience optimization programs we read about today, from companies such as Amazon, Netflix, and Booking.com, didn’t have it all figured out on day one. They iterated on and improved their processes to become the efficient machines they are today.

As Vincent van Gogh once said, “Great things are done by a series of small things brought together.” Take van Gogh’s advice and focus on the small things once in a while. Consider creating agile digital experience optimization frameworks like these to improve the quality of ideas flowing through your program and to facilitate a more efficient prioritization process. Maybe one day your company will be the one we all talk about.