Is your brand currently redesigning its digital experience? If not, chances are you will be within the next couple of years. But before you do, keep reading to see why redesigns often lead to lackluster results or worse—performance declines.

We’ve all heard horror stories about failed relaunches and secretly counted our lucky stars that these failures weren’t our own. In 2018, Snapchat rolled out its redesign, and its daily users subsequently dropped by about 2%, from 191M to 188M. Marks & Spencer reportedly spent £150M and 2 years developing its new website, only to watch online sales tank by 8.1% in the first quarter after the launch. Right before I joined Target.com in 2011, its redesign resulted in revenue declines and a multitude of technical issues that plagued our team for the next couple of years.

Does all of this mean that you shouldn’t redesign your digital experience? Not at all—but a redesign can be quite an expensive endeavor, and you don’t want to make headlines for getting it wrong.

Radical Redesign or Continuous Optimization? Deciding What’s Right for Your Brand

There are a number of good reasons why your organization might want to undertake a radical redesign. Here are a few (but not all) of them:

- Your website design is outdated and doesn’t create a good impression on visitors.

- You’ve tried optimizing your website and have hit a local maximum. The only way to improve performance through design is by making bold changes.

- Your site doesn’t get enough traffic to improve performance through optimization.

- The site is slow, and it was developed without newer UX factors in mind, like Google’s Core Web Vitals.

Although these are good reasons for undergoing a complete digital experience redesign, they are not the reasons why I typically see redesigns taking place. In fact, in many cases, redesign projects lack important quantitative and qualitative rationale.

If that hits close to home, read on: this guide provides a framework for hypothesis testing through a site redesign which will (hopefully) save you money and headaches in the future.

But before we continue, let’s look at some reasons why a radical redesign might not be a good idea for your organization at all.

Why a radical redesign may not be a good idea:

- When the HIPPO (highest paid person’s opinion) just wants something new or when the idea of a site redesign is rooted in a gut decision.

- When you’ve got a case of New Shiny Object syndrome or when you “need” your website to look similar to your competitor’s new site.

- When you haven’t done extensive user and behavioral research.

- Because customers or users say that it’s a good idea. In 2008, Walmart customers said that they would like less cluttered stores. When the store redesigns were completed, customers loved them, but the change resulted in an estimated $1.85 billion in lost sales revenue. People are predictably irrational and poor at predicting their own behavior.

In fact, there’s a high probability that performance won’t be what you hoped it would be. According to a HubSpot report, one-third of marketers surveyed were unhappy with their redesign results. Those are pretty unfortunate odds.

Perhaps more important—and really the crux of this article—is that, with a radical redesign, you won’t know which elements are having a positive or negative impact on performance. What most don’t think about is that radical redesigns mask the effect of changes across a digital experience.

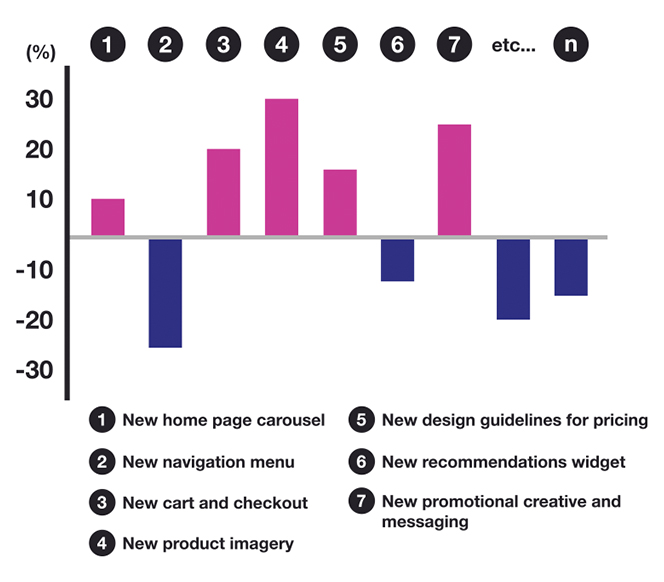

For example, suppose you made several design changes across pages during a website redesign (see the illustration above). Some changes may have a positive impact on performance, and some may have a negative impact. How will you know which are positive and which are negative? Unfortunately, you won’t, which is why you should avoid radical redesigns and instead consider Continuous Optimization.
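To make the masking effect concrete, here is a minimal Python sketch. The change names and lift values are invented purely for illustration, and the sketch assumes that each change's relative effect compounds independently of the others, which a real digital experience rarely guarantees.

```python
# Hypothetical per-change effects on conversion rate (illustrative numbers only).
# Each value is a relative lift: 0.06 means +6%, -0.05 means -5%.
change_effects = {
    "new hero image": 0.06,
    "shorter sign-up form": 0.04,
    "relocated pricing table": -0.05,
    "new navigation": -0.03,
}


def combined_lift(effects):
    """Compound relative lifts into one overall relative lift.

    Assumes the effects are independent and multiplicative.
    """
    total = 1.0
    for lift in effects.values():
        total *= 1.0 + lift
    return total - 1.0


# What a relaunch reports: one aggregate number for all changes together.
overall = combined_lift(change_effects)

# What you could have had if each change had been tested on its own
# and only the winners had been rolled out.
winners = {name: lift for name, lift in change_effects.items() if lift > 0}
winners_only = combined_lift(winners)

print(f"Lift observed after the relaunch: {overall:+.2%}")
print(f"Lift from shipping only the winners: {winners_only:+.2%}")
```

In this made-up scenario the relaunch would report a gain of roughly 1.6%, even though two of the four changes are hurting performance. Shipping only the two winners would have been worth a little over 10%, but a radical redesign only ever shows you the aggregate.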

Think of your website as an organism. Continuous Optimization allows your website (the organism) to evolve into a better version of itself over time. Rather than changing many things at once, Continuous Optimization allows you to test and roll out new design features in a systematic way. This will save your business money in the long run, and will reduce the risk of launching features that don’t have a net positive impact on the user experience or important business metrics.

If that makes sense and you’d like to explore how to approach Continuous Optimization, read on for a simple framework to follow.

Step 1: Identify Problem Statements

I’ve written about how heuristics are a great way to identify problem statements, but that was specific to UX and a systems thinking approach to the digital experience. A Continuous Optimization framework involves more research and an understanding of specific business challenges (not just UX challenges) that can stall digital performance.

The first step in Continuous Optimization when undertaking a site redesign is to break down the higher-level business problems you’d like to address. These problems aren’t opinion-based (that would fall under the category of reasons not to undertake a redesign); they are data-driven business problems that are informed by quantitative and qualitative research.

Here are a few examples of problem statements:

Problem Statement 1: It isn’t clear how users will sign up or why they should do so.

Problem Statement 2: Savings are inconsistently communicated across funnel pages.

Problem Statement 3: Unique product benefits are hidden or misunderstood across the path to purchase.

Keep in mind that problem statements describe a specific business problem or desired outcome. There can be many different problem statements that you want to optimize for, but this can’t be done if you launch a full site redesign. Radical redesigns are short-term solutions to a generic problem, and reasons for the resulting performance outcomes cannot be fully understood. By addressing individual problem statements through Continuous Optimization, you can organically solve for a complex web of issues and develop a better understanding of them along the way.

Step 2: Create Hypotheses for Each Problem Statement

For each problem statement, you might develop ten or twenty individual hypotheses. That doesn’t mean that you have to test all of them, but this creative exercise is important for exploration and prioritization later in the process.

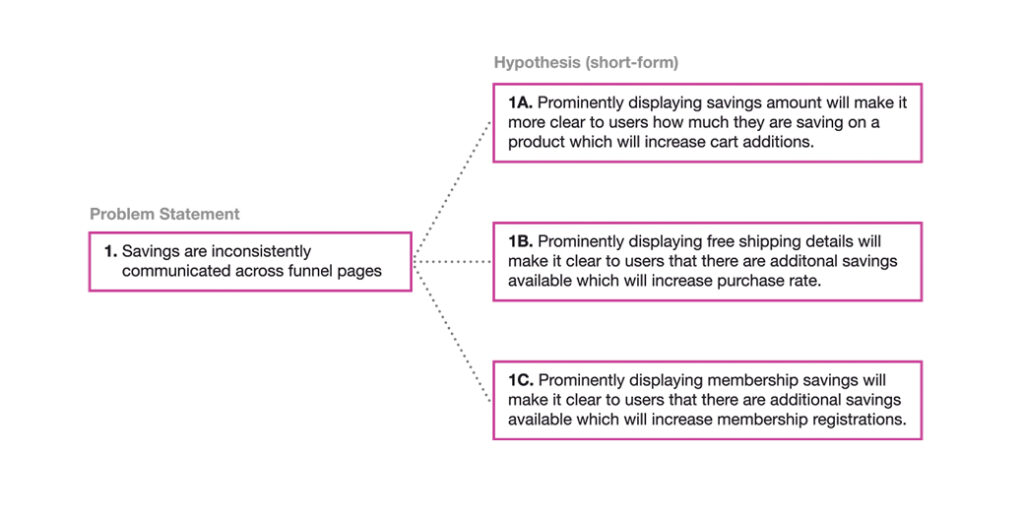

I always think of this exercise like the branches of a tree, so a mind map (shown above) is a helpful visual tool for explanation.

Notice that hypotheses are not the same as variants of an element (i.e., red button, blue button, green button). You’ll start developing different variants for testing later. Instead, we’ve created informed hypotheses that provide us with different exploratory directions (potential solutions) for addressing the same problem statement. And notice how each hypothesis is intended to impact a different metric: this provides flexibility when business metrics change throughout an evolutionary design but the problem statement is still relevant.

PRO TIP: If you’ve never developed a hypothesis before, just remember: IF, THEN, BECAUSE:

IF we were to do X, THEN we’d expect Y effect, BECAUSE of some rationale (hopefully informed by data).
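If you keep your hypotheses in a spreadsheet or backlog tool, it can help to give the IF, THEN, BECAUSE parts their own fields. Below is a minimal Python sketch of that idea. The `Hypothesis` class and its field names are my own illustration, and apart from the problem statement (taken from the examples above) the values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """One IF / THEN / BECAUSE hypothesis tied to a problem statement."""

    problem_statement: str
    change: str           # IF we were to do X
    expected_effect: str  # THEN we'd expect Y
    rationale: str        # BECAUSE of some (ideally data-informed) reason
    primary_metric: str   # the metric this hypothesis is meant to move

    def statement(self) -> str:
        """Render the hypothesis as a single readable sentence."""
        return (
            f"IF we {self.change}, "
            f"THEN we expect {self.expected_effect}, "
            f"BECAUSE {self.rationale}."
        )


# Hypothetical example values for illustration only.
example = Hypothesis(
    problem_statement="Savings are inconsistently communicated across funnel pages.",
    change="show the savings amount next to the price on every funnel page",
    expected_effect="more users to start checkout",
    rationale="research suggests users lose track of the discount between pages",
    primary_metric="checkout start rate",
)

print(example.statement())
```

Keeping the metric as a separate field makes it easier to re-sort or re-filter the backlog when business metrics change but the problem statement stays the same.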

If you’ve read this far, you should see a framework start to emerge as an aid for the design team. Remember, most designers are not informed by data—so when you go to them asking for a new digital experience design, having fleshed-out problem statements and hypotheses that you’d like to explore through Continuous Optimization will give them a helpful set of very specific boundaries to design within.

Step 3: Prioritize Hypotheses

The next step is pretty straightforward: prioritize your hypotheses according to whatever prioritization framework you have developed internally.

If you need some inspiration, here are Two Digital Experience Optimization Frameworks To Ensure Success. In that article, you’ll learn how companies like Amazon, Netflix, and Booking.com have become industry leaders while running thousands of experiments simultaneously. More importantly, you’ll learn how to develop two digital optimization frameworks that will improve the quality of ideas flowing through your program and facilitate a more efficient prioritization process.
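If you don’t have an internal framework yet, the mechanics of scoring a backlog are simple. The sketch below assumes an ICE-style score (impact, confidence, ease, each rated 1 to 10 and averaged). That is one widely used scheme, not necessarily the one described in the article linked above, and the hypothesis names and ratings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScoredHypothesis:
    name: str
    impact: int      # 1-10: how much could this move the metric?
    confidence: int  # 1-10: how strong is the supporting research?
    ease: int        # 1-10: how cheap is it to build and test?

    @property
    def score(self) -> float:
        """Average of the three ratings (an assumed ICE-style formula)."""
        return (self.impact + self.confidence + self.ease) / 3


# Hypothetical backlog with made-up ratings.
backlog = [
    ScoredHypothesis("Surface product benefits in the cart", 6, 4, 3),
    ScoredHypothesis("Show savings amount on funnel pages", 7, 6, 8),
    ScoredHypothesis("Explain sign-up benefits above the fold", 8, 5, 5),
]

# Highest score first: this becomes the testing order.
ranked = sorted(backlog, key=lambda h: h.score, reverse=True)

for position, hypothesis in enumerate(ranked, start=1):
    print(f"{position}. {hypothesis.name} (score {hypothesis.score:.1f})")
```

Whatever formula you choose, the point is to make the ranking explicit so that it can be debated and revised, rather than decided by gut feel.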

Step 4: Develop Variants for Testing

The next step is to create actual variants for testing. Again, treat this like a creative exercise: develop as many variants as you can within the boundaries of your hypotheses. Some will get tested, and others will not—but documenting these will help you see the big picture and prioritize later.

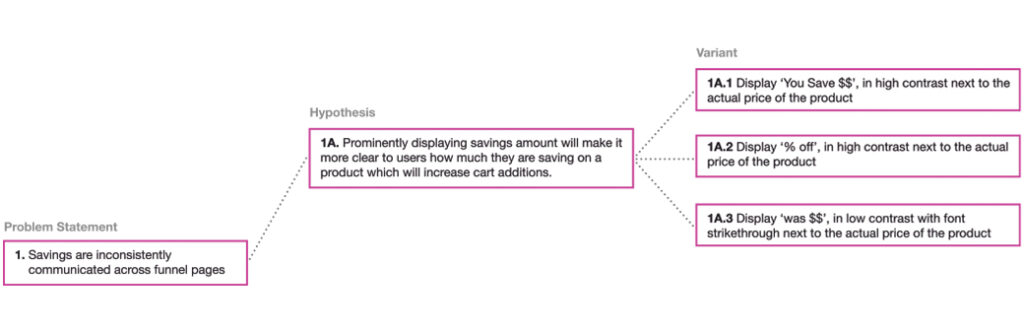

To simplify this illustration, let’s look at just one of our hypotheses. You’ll notice that I’ve created three separate variants, each of which is described slightly differently. I’ve also separated specific types of pricing context for testing: savings amount, savings percentage, and the old price. All three elements describe the hypothesis in different ways. Notice that I used the terms “high contrast” and “low contrast” instead of specific RGB values. This was an intentional decision made to allow the designers some creative latitude.

When Creating Variants, Consider These Three Nouns

While your creative approach to evolutionary design may vary, it’s worth considering the following.

When developing individual variants, consider an element’s existence, location, and quality. You don’t have to test them in that order, but it’s generally a good idea to test the existence of an element (like a widget or imagery) first, because maintaining it can sometimes require a high LOE (level of effort). If your test performs well without something that requires a lot of effort, this is not only a better user experience; it also reduces the labor, and therefore the cost, required to produce and maintain that element.

Let’s explore all three in a bit more detail.

Existence: The literal existence of an element on a page or throughout the digital experience. If your test variant removes an element from the design and it increases performance, you’re reducing the cost of maintaining this element in the future. This is the best-case scenario when considering LOE, so it’s always a good idea to consider starting with the removal of an element before experimenting with the location or quality.

Location: The location of an element (e.g. moving from the bottom of the page to the top) is also a good low-LOE consideration because the asset might already be created—you’re just moving it around on the page with some JavaScript.

Quality: If possible, consider the quality of an element last. By then, you’ve already tested whether the existence and the location of an element (both low LOE) have an impact. If they do, then exploring more variants of that element makes sense. The quality of an image might be described by size, style, or kind of image (e.g. commercial vs. lifestyle). You might also describe the quality based on how text is displayed: by color, weight, font family, size and so on. Quality can require the highest LOE because you’re changing the appearance of an element or creating a new element from scratch, though this isn’t always the case.

Notice that I didn’t name specific design elements like styles or colors in the above variants. After creating the initial variants and working with your design team, these variants can be further updated with more specific design recommendations.
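The existence, location, quality ordering is easy to apply to a variant list. Here is a minimal Python sketch under the assumption that each variant is tagged with the dimension it changes. The `Dimension` and `Variant` names, and the example variants, are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Dimension(IntEnum):
    """What a variant changes, numbered in the suggested testing order."""

    EXISTENCE = 1  # remove (or add) the element entirely
    LOCATION = 2   # move an element that already exists
    QUALITY = 3    # change how the element looks or reads


@dataclass
class Variant:
    element: str
    dimension: Dimension
    description: str


# Hypothetical variants for a single element, deliberately out of order.
variants = [
    Variant("savings badge", Dimension.QUALITY, "high-contrast badge"),
    Variant("savings badge", Dimension.EXISTENCE, "remove the badge"),
    Variant("savings badge", Dimension.LOCATION, "move the badge next to the price"),
]

# Sort so that the lowest-effort questions are answered first.
test_order = sorted(variants, key=lambda v: v.dimension)

for variant in test_order:
    print(f"{variant.dimension.name:<9} {variant.element}: {variant.description}")
```

Note that the descriptions stay at the “high contrast” level of detail. Specific colors and styles can be filled in later with the design team.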

Step 5: Determine Your Approach to Testing

Now that you have your prioritized list of hypotheses and variants for testing, you’ll need to consider how you want to prioritize them in a Continuous Optimization roadmap.

Unless you’re using an AI tool like Evolv AI or Intellimize, you’re likely A/B testing—so consider one hypothesis at a time, or just a couple of closely related hypotheses (themes are described in more detail below). Otherwise, you won’t know which is having a positive or negative impact on performance, which is the issue we’re trying to avoid in the first place.
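For readers who want to see what “knowing which is having a positive or negative impact” looks like numerically, here is a minimal sketch of a two-sided, two-proportion z-test, one standard way to compare conversion rates in an A/B test. It uses only the Python standard library, and the visitor and conversion counts are hypothetical. Your testing tool may use a different statistical method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_a / conv_b: number of conversions in control (A) and variant (B)
    n_a / n_b: number of visitors in each group
    Returns (z statistic, p-value).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 10,000 visitors per group.
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=570, n_b=10_000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

If the p-value falls below the threshold you chose before the test started (0.05 is a common convention), the difference is unlikely to be noise. Because only one hypothesis changed between A and B, you also know what caused it.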

Regardless of whether you’re doing Continuous Optimization on a single page or across multiple pages, there are generally three ways you might approach hypothesis testing. Let’s look at each one. Note that there are pros and cons to each method, so you’ll have to assess your risk tolerance as you plan for Continuous Optimization.

Umbrella Testing

This is basically a radical redesign test that allows for testing multiple hypotheses by changing many elements on a page in an A/B test. Generally, I only recommend this if you can answer yes to any of the four “good” reasons for a radical redesign as listed above—like when traffic is too low to improve through optimization.

Pros:

- Can quickly understand if design B outperforms design A

Cons:

- Will not know which specific elements caused positive or negative change

- Extremely high LOE and risk

- High cost associated, no understanding of ROI

- Design rollout is slow

- Generally, big changes to user experience are shocking to returning users, and effects are not fully understood over longer periods of time

Facet Testing

Facet testing is great when you want to home in on one specific component of a site or page. In other words, facet testing is ideal when you choose a hypothesis and want to test multiple variations of the same element (e.g. a button). This style of testing isn’t very productive at the beginning stages of Continuous Optimization because you won’t yet have explored enough hypotheses to know which are having a greater impact.

Pros:

- Will know exactly which element has a positive or negative impact

- A single change has the potential to be less complex, reducing technical risks associated with an individual test

- ‘Quick wins’ are more achievable than with other, more complex alternatives

Cons:

- Will take a long time to test all of your individual variants

- Without having explored other hypotheses first, testing different variants of the same hypothesis can be suboptimal and may slow down your pace of learning and evolutionary design
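To get a feel for how long facet testing can take, you can estimate the traffic each test needs. The sketch below uses a standard normal-approximation formula for comparing two proportions, with the common defaults of a 5% significance level and 80% power. The baseline rate, target lift, traffic, and variant count are hypothetical, and it assumes variants are tested one after another against a control with an even traffic split.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH group to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def weeks_to_test_all(n_variants, weekly_visitors, baseline_rate, relative_lift):
    """Weeks needed when each variant gets its own sequential A/B test."""
    per_arm = sample_size_per_arm(baseline_rate, relative_lift)
    visitors_per_test = 2 * per_arm  # control + one variant
    return ceil(n_variants * visitors_per_test / weekly_visitors)


# Hypothetical inputs: 5% baseline conversion, hoping to detect a 10% relative lift.
per_arm = sample_size_per_arm(baseline_rate=0.05, relative_lift=0.10)
weeks = weeks_to_test_all(
    n_variants=3, weekly_visitors=20_000, baseline_rate=0.05, relative_lift=0.10
)

print(f"Visitors needed per group: {per_arm:,}")
print(f"Weeks to test 3 variants one at a time: {weeks}")
```

Smaller expected lifts or lower traffic push the timeline out quickly, which is part of why grouping related variants (described next) can be attractive.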

Thematic Testing

This approach entails grouping and testing more than one element at a time. Because most organizations only have enough traffic for A/B testing, it’s a good idea to take advantage of your traffic when possible, and the best way to do that is to group elements into themes under the same or similar hypotheses. However, this depends on your comfort level.

For example, you could group all three of the variants illustrated in the above example. You could also group them with variants under other hypotheses, but again, that depends on your own level of comfort and ability to take on risk. When grouping variants across different hypotheses, always ask this question: What will I do next if I don’t know which variants are causing the observed change in performance?

Pros:

- Ability to test more rapidly than with facet testing

- Ability to explore different hypotheses more quickly

- Less risk involved than with umbrella testing

- More flexible than facet testing for multi-page testing

Cons:

- May not know which specific elements cause positive or negative change (dependent on your risk tolerance and on how many elements you’re testing)

- Possibly a higher LOE than facet testing

Getting Started with Continuous Optimization

If your company is thinking about undertaking a radical redesign in the coming months, consider Continuous Optimization instead. Continuous Optimization allows you to address specific business problems by way of hypothesis testing, which will not only produce a higher-performing digital experience over time but also greatly reduce risks associated with a large redesign rollout.

Don’t make the news for all of the wrong reasons.

If you’d like to get started, contact us. BlastX is here to guide you through the redesign process while improving performance metrics, not hurting them. We’re happy to discuss whether our Continuous Optimization framework is right for your business.